吴裕雄--天生自然 Python Data Analysis: Searching for Exoplanets in Deep-Space Data with a Keras-Based CNN

#We import libraries for linear algebra, graphs, and evaluation of results

import numpy as np

import matplotlib.pyplot as plt

from sklearn.linear_model import LinearRegression

from sklearn.preprocessing import StandardScaler

from sklearn.metrics import roc_curve, roc_auc_score

from scipy.ndimage import uniform_filter1d

#Keras is a high level neural networks library, based on either tensorflow or theano

from keras.models import Sequential, Model

from keras.layers import Conv1D, MaxPool1D, Dense, Dropout, Flatten, BatchNormalization, Input, concatenate, Activation

from keras.optimizers import Adam

INPUT_LIB = 'F:\\kaggleDataSet\\kepler-labelled\\'

raw_data = np.loadtxt(INPUT_LIB + 'exoTrain.csv', skiprows=1, delimiter=',')

x_train = raw_data[:, 1:]

y_train = raw_data[:, 0, np.newaxis] - 1.

raw_data = np.loadtxt(INPUT_LIB + 'exoTest.csv', skiprows=1, delimiter=',')

x_test = raw_data[:, 1:]

y_test = raw_data[:, 0, np.newaxis] - 1.

del raw_data

x_train = ((x_train - np.mean(x_train, axis=1).reshape(-1,1))/ np.std(x_train, axis=1).reshape(-1,1))

x_test = ((x_test - np.mean(x_test, axis=1).reshape(-1,1)) / np.std(x_test, axis=1).reshape(-1,1))
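The two lines above standardize each light curve on its own (per row), so every star ends up with zero mean and unit variance regardless of its absolute brightness. A minimal sketch of the same operation on made-up numbers (the `flux` array is hypothetical, not taken from the dataset):

```python
import numpy as np

# Hypothetical toy light curves: 2 stars, 5 flux readings each.
flux = np.array([[10., 12., 11., 9., 8.],
                 [1000., 1010., 990., 1005., 995.]])

# Same per-row standardization as in the post;
# keepdims=True plays the role of reshape(-1, 1).
normalized = (flux - flux.mean(axis=1, keepdims=True)) / flux.std(axis=1, keepdims=True)

row_means = normalized.mean(axis=1)  # approximately 0 for every row
row_stds = normalized.std(axis=1)    # approximately 1 for every row
print(row_means, row_stds)
```

After this step a bright star and a faint star are on the same scale, which is what lets one set of convolution filters work for both.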

x_train = np.stack([x_train, uniform_filter1d(x_train, axis=1, size=200)], axis=2)

x_test = np.stack([x_test, uniform_filter1d(x_test, axis=1, size=200)], axis=2)
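The `np.stack` calls above turn each light curve into a two-channel signal: channel 0 is the normalized flux, channel 1 is a moving average of it. The following sketch illustrates the resulting layout on random data; the array sizes and the window of 5 are illustrative assumptions (the post uses `size=200`):

```python
import numpy as np
from scipy.ndimage import uniform_filter1d

rng = np.random.default_rng(0)
curves = rng.normal(size=(3, 50))  # hypothetical: 3 light curves, 50 time steps

# Moving average along the time axis (window of 5 samples here).
smoothed = uniform_filter1d(curves, size=5, axis=1)

# Stack raw and smoothed curves as channels: (samples, time steps, channels).
two_channel = np.stack([curves, smoothed], axis=2)
print(two_channel.shape)
```

The last axis is what `Conv1D` treats as input channels, which is why `input_shape=x_train.shape[1:]` works without further reshaping.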

model = Sequential()

model.add(Conv1D(filters=8, kernel_size=11, activation='relu', input_shape=x_train.shape[1:]))

model.add(MaxPool1D(strides=4))

model.add(BatchNormalization())

model.add(Conv1D(filters=16, kernel_size=11, activation='relu'))

model.add(MaxPool1D(strides=4))

model.add(BatchNormalization())

model.add(Conv1D(filters=32, kernel_size=11, activation='relu'))

model.add(MaxPool1D(strides=4))

model.add(BatchNormalization())

model.add(Conv1D(filters=64, kernel_size=11, activation='relu'))

model.add(MaxPool1D(strides=4))

model.add(Flatten())

model.add(Dropout(0.5))

model.add(Dense(64, activation='relu'))

model.add(Dropout(0.25))

model.add(Dense(64, activation='relu'))

model.add(Dense(1, activation='sigmoid'))
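To see how quickly the four Conv1D/MaxPool1D blocks shrink the time axis, the output lengths can be worked out by hand. This sketch assumes an input of 3197 time steps (the number of flux columns in the Kaggle Kepler files, an assumption here) and the Keras defaults of `padding='valid'` and `pool_size=2`; the helper names are hypothetical:

```python
def conv1d_len(n, kernel_size):
    """Output length of a 'valid' 1D convolution with stride 1."""
    return n - kernel_size + 1


def maxpool1d_len(n, pool_size=2, strides=4):
    """Output length of a 'valid' 1D max pooling layer."""
    return (n - pool_size) // strides + 1


n = 3197  # assumed number of time steps per light curve
lengths = []
for _ in range(4):  # four Conv1D(kernel_size=11) + MaxPool1D(strides=4) blocks
    n = conv1d_len(n, 11)
    n = maxpool1d_len(n)
    lengths.append(n)

flattened = lengths[-1] * 64  # the last Conv1D has 64 filters
print(lengths, flattened)
```

Under these assumptions the `Flatten` layer feeds a few hundred features into the first `Dense(64)` layer, which keeps the fully connected part small.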

def batch_generator(x_train, y_train, batch_size=32):
    """
    Gives equal number of positive and negative samples, and rotates them randomly in time
    """
    half_batch = batch_size // 2
    x_batch = np.empty((batch_size, x_train.shape[1], x_train.shape[2]), dtype='float32')
    y_batch = np.empty((batch_size, y_train.shape[1]), dtype='float32')

    yes_idx = np.where(y_train[:,0] == 1.)[0]
    non_idx = np.where(y_train[:,0] == 0.)[0]

    while True:
        np.random.shuffle(yes_idx)
        np.random.shuffle(non_idx)

        x_batch[:half_batch] = x_train[yes_idx[:half_batch]]
        x_batch[half_batch:] = x_train[non_idx[half_batch:batch_size]]
        y_batch[:half_batch] = y_train[yes_idx[:half_batch]]
        y_batch[half_batch:] = y_train[non_idx[half_batch:batch_size]]

        for i in range(batch_size):
            sz = np.random.randint(x_batch.shape[1])
            x_batch[i] = np.roll(x_batch[i], sz, axis = 0)

        yield x_batch, y_batch
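The generator's docstring describes two ideas: every batch is half positives and half negatives, and every sample is rotated by a random offset in time. The sketch below reproduces both ideas in a compact, self-contained form on toy data so they can be checked directly; `balanced_batches` and the toy arrays are hypothetical stand-ins, not the post's own function:

```python
import numpy as np


def balanced_batches(x, y, batch_size=4):
    """Yield batches with equal positives/negatives, each rolled in time."""
    half = batch_size // 2
    yes_idx = np.where(y[:, 0] == 1.)[0]
    non_idx = np.where(y[:, 0] == 0.)[0]
    while True:
        np.random.shuffle(yes_idx)
        np.random.shuffle(non_idx)
        idx = np.concatenate([yes_idx[:half], non_idx[:batch_size - half]])
        x_batch = x[idx].copy()
        for i in range(batch_size):
            shift = np.random.randint(x.shape[1])
            # axis=0 of one sample is time, so all channels shift together.
            x_batch[i] = np.roll(x_batch[i], shift, axis=0)
        yield x_batch, y[idx]


# Toy data: 10 samples, 6 time steps, 2 channels; sample k holds values k*100 + t.
x = np.zeros((10, 6, 2), dtype='float32')
x[:, :, 0] = np.arange(10)[:, None] * 100 + np.arange(6)
x[:, :, 1] = x[:, :, 0]
y = np.zeros((10, 1), dtype='float32')
y[:3] = 1.  # only 3 positives out of 10

xb, yb = next(balanced_batches(x, y))
source_rows = (xb[:, :, 0] // 100).astype(int)  # which sample each row came from
print(yb[:, 0], source_rows[:, 0])
```

Because the dataset has very few positive examples, drawing them in every batch and rotating them acts as a simple form of oversampling plus augmentation.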

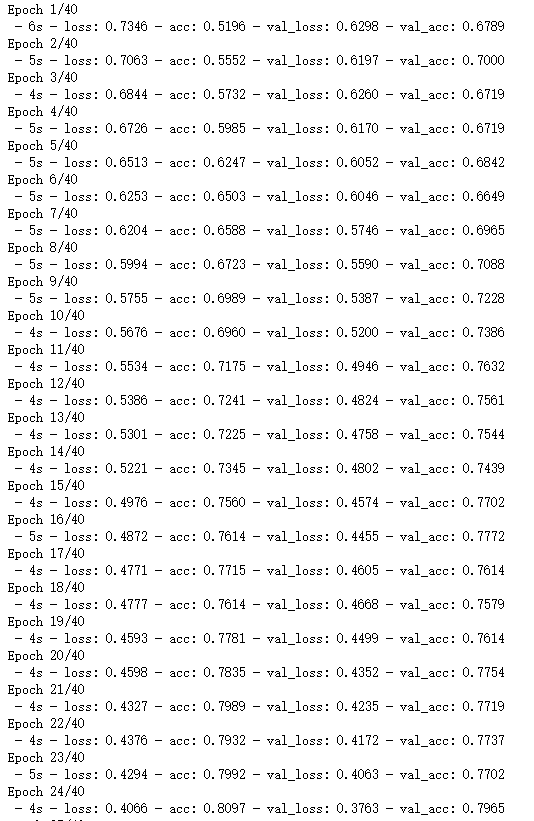

#Start with a slightly lower learning rate, to ensure convergence

model.compile(optimizer=Adam(1e-5), loss = 'binary_crossentropy', metrics=['accuracy'])

hist = model.fit_generator(batch_generator(x_train, y_train, 32),

validation_data=(x_test, y_test),

verbose=0, epochs=5,

steps_per_epoch=x_train.shape[1]//32)

#Then speed things up a little

model.compile(optimizer=Adam(4e-5), loss = 'binary_crossentropy', metrics=['accuracy'])

hist = model.fit_generator(batch_generator(x_train, y_train, 32),

validation_data=(x_test, y_test),

verbose=2, epochs=40,

steps_per_epoch=x_train.shape[1]//32)

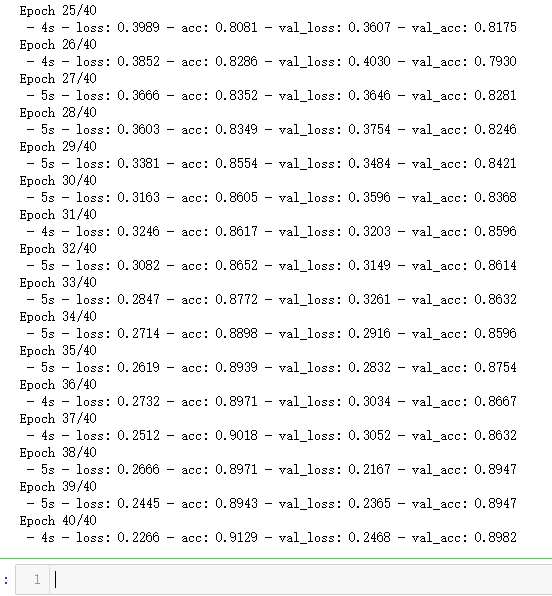

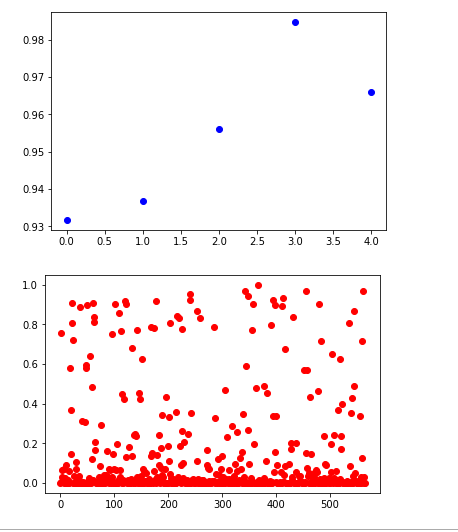

plt.plot(hist.history['loss'], color='b')

plt.plot(hist.history['val_loss'], color='r')

plt.show()

plt.plot(hist.history['acc'], color='b')

plt.plot(hist.history['val_acc'], color='r')

plt.show()

non_idx = np.where(y_test[:,0] == 0.)[0]

yes_idx = np.where(y_test[:,0] == 1.)[0]

y_hat = model.predict(x_test)[:,0]

plt.plot([y_hat[i] for i in yes_idx], 'bo')

plt.show()

plt.plot([y_hat[i] for i in non_idx], 'ro')

plt.show()

y_true = (y_test[:, 0] + 0.5).astype("int")

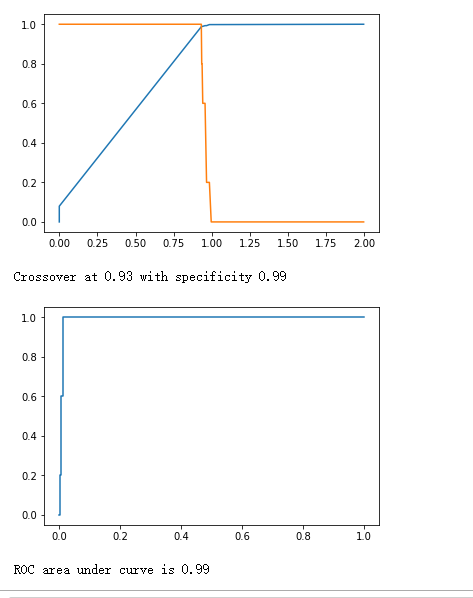

fpr, tpr, thresholds = roc_curve(y_true, y_hat)

plt.plot(thresholds, 1.-fpr)

plt.plot(thresholds, tpr)

plt.show()

crossover_index = np.min(np.where(1.-fpr <= tpr))

crossover_cutoff = thresholds[crossover_index]

crossover_specificity = 1.-fpr[crossover_index]

print("Crossover at {0:.2f} with specificity {1:.2f}".format(crossover_cutoff, crossover_specificity))
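The crossover cutoff above is the first threshold returned by `roc_curve` at which specificity (`1 - fpr`) no longer exceeds sensitivity (`tpr`). A small sketch on four made-up scores (the labels and scores are hypothetical) shows how the index is picked:

```python
import numpy as np
from sklearn.metrics import roc_curve

# Hypothetical labels and predicted scores.
y_true = np.array([0, 0, 1, 1])
y_score = np.array([0.1, 0.4, 0.35, 0.8])

fpr, tpr, thresholds = roc_curve(y_true, y_score)

# Thresholds are sorted from high to low, so the smallest index satisfying
# the condition is the highest cutoff where sensitivity catches up with specificity.
crossover_index = np.min(np.where(1. - fpr <= tpr))
crossover_cutoff = thresholds[crossover_index]
crossover_specificity = 1. - fpr[crossover_index]
print(crossover_index, crossover_cutoff, crossover_specificity)
```

This picks a cutoff that balances the two error types rather than defaulting to 0.5, which matters when the classes are as imbalanced as they are here.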

plt.plot(fpr, tpr)

plt.show()

print("ROC area under curve is {0:.2f}".format(roc_auc_score(y_true, y_hat)))

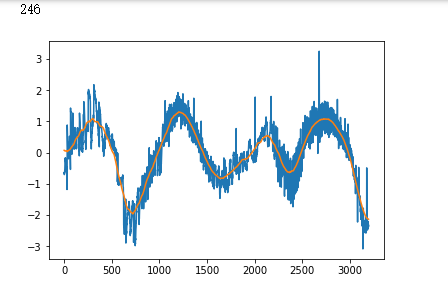

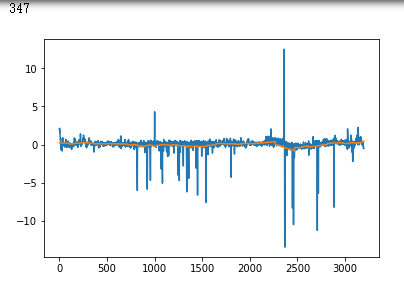

false_positives = np.where(y_hat * (1. - y_test[:, 0]) > 0.5)[0]
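Array shapes matter in the false-positive mask: `y_hat` is a flat vector of length N, while `y_test` was loaded as an N×1 column. Multiplying the two directly broadcasts to an N×N matrix, so the label column needs to be flattened first. A minimal sketch with made-up values (both arrays here are hypothetical):

```python
import numpy as np

y_hat = np.array([0.9, 0.2, 0.7, 0.1])        # predictions, shape (4,)
y_test = np.array([[0.], [0.], [1.], [0.]])   # labels as a column, shape (4, 1)

broadcast = y_hat * (1. - y_test)             # (4,) * (4, 1) -> (4, 4)
elementwise = y_hat * (1. - y_test[:, 0])     # (4,) * (4,)   -> (4,)

# Negatives (label 0) whose predicted score exceeds 0.5.
false_positives = np.where(elementwise > 0.5)[0]
print(broadcast.shape, elementwise.shape, false_positives)
```

Only sample 0 is a true negative with a score above 0.5, so it is the single false positive in this toy case.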

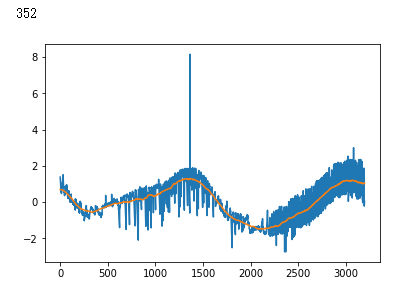

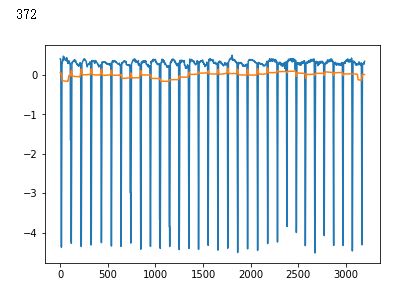

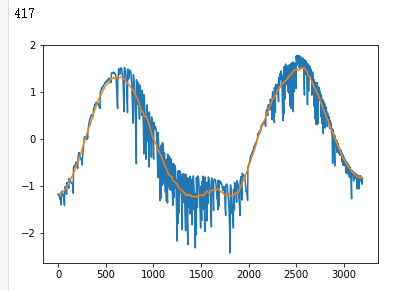

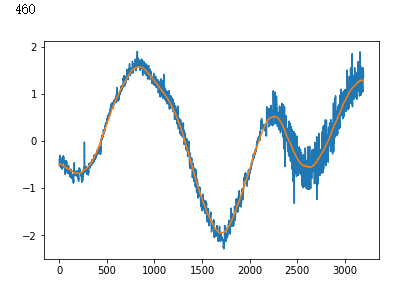

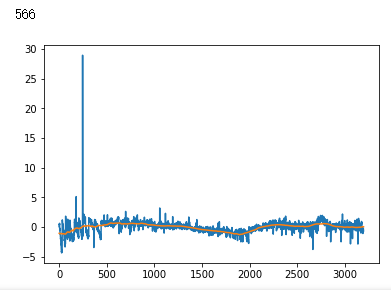

for i in non_idx:
    if y_hat[i] > crossover_cutoff:
        print(i)
        plt.plot(x_test[i])
        plt.show()
